Databricks Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 Question Answer

A developer is working with a pandas DataFrame containing user behavior data from a web application.

Which approach should be used for executing a groupBy operation in parallel across all workers in Apache Spark 3.5?

A)

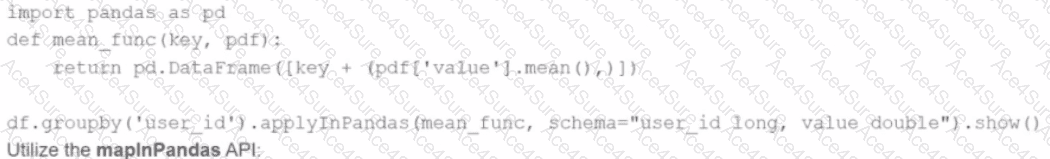

Use the applylnPandas API

B)

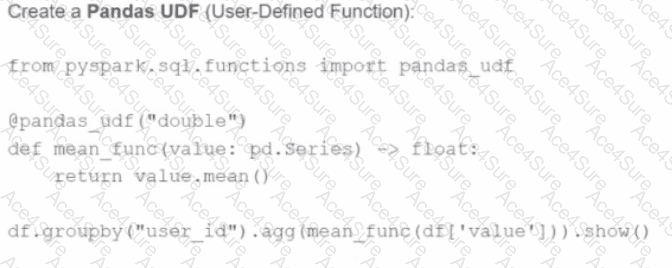

C)

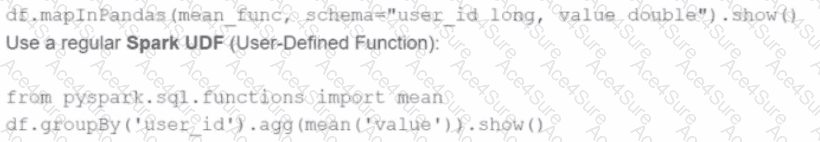

D)