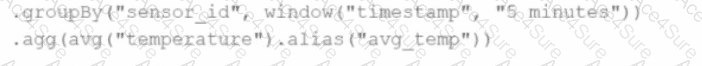

The correct answer is D because it uses proper time-based window aggregation along with watermarking, which is the required pattern in Spark Structured Streaming for time-based aggregations over event-time data.

From the Spark 3.5 documentation on structured streaming:

"You can define sliding windows on event-time columns, and use groupBy along with window() to compute aggregates over those windows. To deal with late data, you use withWatermark() to specify how late data is allowed to arrive."

(Source: Structured Streaming Programming Guide)

In option D, the use of:

python

CopyEdit

groupBy("sensor_id", window("timestamp", "5 minutes"))

agg(avg("temperature").alias("avg_temp"))

ensures that for each sensor_id, the average temperature is calculated over 5-minute event-time windows. To complete the logic, it is assumed that withWatermark("timestamp", "5 minutes") is used earlier in the pipeline to handle late events.

Explanation of why other options are incorrect:

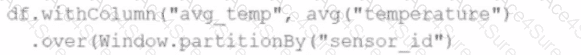

Option A uses Window.partitionBy which applies to static DataFrames or batch queries and is not suitable for streaming aggregations.

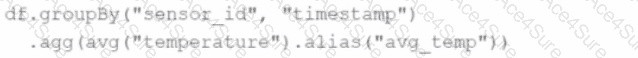

Option B does not apply a time window, thus does not compute the rolling average over 5 minutes.

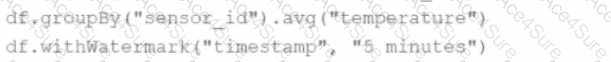

Option C incorrectly applies withWatermark() after an aggregation and does not include any time window, thus missing the time-based grouping required.

Therefore, Option D is the only one that meets all requirements for computing a time-windowed streaming aggregation.