In the Snowflake ecosystem, Parquet is treated as a semi-structured data format. When you stage a Parquet file, Snowflake does not automatically parse it into multiple columns like it might with a flat CSV file. Instead, the entire content of a single row or record is loaded into a single VARIANT column, which is referenced in SQL using the positional notation $1.

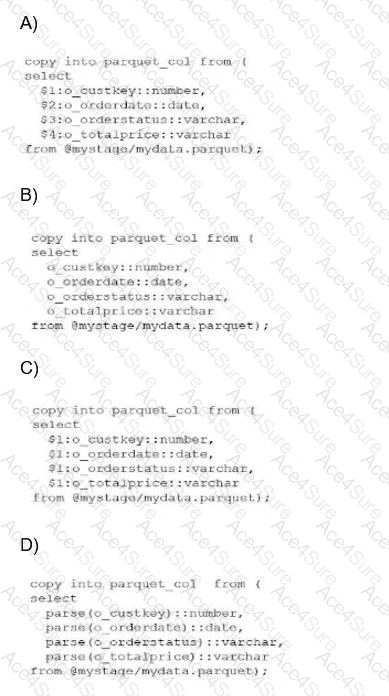

The fundamental mistake often made—and represented in Option A—is treating Parquet as a delimited format where $1, $2, and $3 refer to different columns. In Parquet ingestion, columns $2 and beyond will return NULL because the schema is contained within the object in $1.

To successfully "shred" or flatten this semi-structured data into a relational table with separate columns, an analyst must use path notation. This involves referencing the root object ($1), followed by a colon (:), and then the specific element key (e.g., $1:o_custkey). Furthermore, because the values extracted from a Variant are technically still Variants, they must be explicitly cast to the correct data type using the double-colon syntax (e.g., ::number, ::date) to ensure they land in the target table with the correct data types.

Evaluating the Options:

Option A is incorrect because it uses positional references ($2, $3, etc.) which are only valid for structured files like CSVs.

Option B is incorrect because it attempts to reference keys directly without the required stage variable ($1) and colon separator.

Option D is incorrect as it uses a non-standard parse() function that does not exist for this purpose in Snowflake SQL.

Option C is the 100% correct syntax. It correctly identifies that the Parquet data resides in $1, utilizes the colon to access internal keys, and applies the necessary type casting. This specific method is known as "Transformation During Ingestion" and is a core competency for any SnowPro Advanced Data Analyst.